As briefly discussed here, discontinuities degrade both the accuracy and the achieved order of convergence of finite difference (and other discretization) methods. In some cases the effect is so significant that special treatment is really needed. One such case in finance is the pricing of discretely monitored barrier options. At each monitoring date the solution profile along the asset axis is flattened beyond the barrier (for knock-out type options), to zero or to the rebate amount. Thankfully the treatment needed is very simple: replace the value at the grid point nearest to the barrier with the average of the solution over the interval from half a grid spacing to its left to half a spacing to its right. We thus need to find the area under the solution using the known values at the nearest grid points. We can simply assume piecewise linear variation between adjacent grid values and use the trapezoidal rule (I'll call that linear smoothing). Or, if we want to be a little more sophisticated, we can fit a second-order polynomial to the three nearest points and integrate that instead (let's call that quadratic smoothing).
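A minimal sketch of both variants, assuming a uniform grid with a node exactly on the barrier of an up-and-out option, and assuming the fit uses only nodes on the live side of the barrier. The names `Smoothing` and `applyUpAndOutBarrier` are hypothetical, and this is not necessarily how SmoothOperator.cpp does it. Under these assumptions the cell around the barrier node is half live and half knocked out, so its average is half the mean of the continuation value over the live half plus half the rebate. Integrating the linear interpolant gives a live mean of (V[i-1] + 3 V[i]) / 4, and integrating the parabola through the three live-side nodes gives (-V[i-2] + 5 V[i-1] + 8 V[i]) / 12.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class Smoothing { None, Linear, Quadratic };

// Hypothetical helper, a sketch under stated assumptions:
//  - uniform grid in S,
//  - node iB sits exactly on the barrier,
//  - up-and-out: everything at or above the barrier is knocked out.
// On entry v holds the continuation values just before the monitoring date
// is applied; on exit it holds the values just after.
void applyUpAndOutBarrier(std::vector<double>& v, std::size_t iB,
                          double rebate, Smoothing mode)
{
    assert(iB >= 2 && iB < v.size());

    // Without smoothing the barrier node is simply knocked out
    // (assumed convention: knock-out applies for S >= B).
    double nodeValue = rebate;

    if (mode == Smoothing::Linear) {
        // Mean of the piecewise-linear interpolant over [B - h/2, B]
        // (trapezoidal rule, exact for a linear function).
        const double liveMean = (v[iB - 1] + 3.0 * v[iB]) / 4.0;
        nodeValue = 0.5 * (liveMean + rebate);
    } else if (mode == Smoothing::Quadratic) {
        // Mean over [B - h/2, B] of the parabola through
        // nodes iB-2, iB-1 and iB.
        const double liveMean =
            (-v[iB - 2] + 5.0 * v[iB - 1] + 8.0 * v[iB]) / 12.0;
        nodeValue = 0.5 * (liveMean + rebate);
    }

    // Flatten the profile from the barrier upwards, then store the
    // cell average at the barrier node.
    for (std::size_t k = iB; k < v.size(); ++k) {
        v[k] = rebate;
    }
    v[iB] = nodeValue;
}
```

In a backward time-stepping loop this would be called once per monitoring date, right after stepping to that date. The weights are independent of the grid spacing only because the grid is uniform and the barrier sits on a node; any other placement needs the integrals redone with the actual distances.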

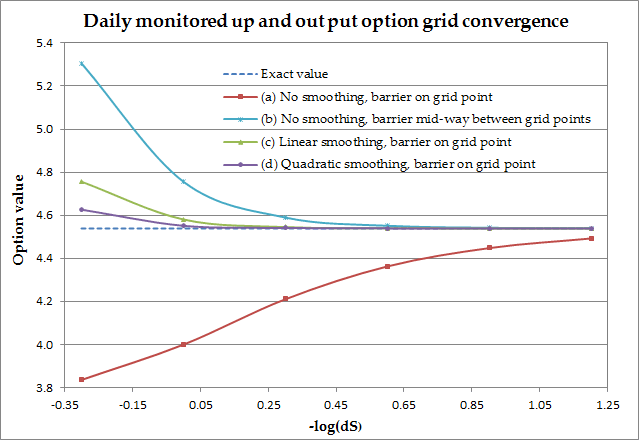

As an example let's try to price a daily monitored up-and-out put option. I'll use simple Black-Scholes to demonstrate, but the qualitative behaviour would be very similar under other models. In order to show the effect clearly I'll start with a uniform grid. The discretization uses central differences and is thus second order in space (asset S), and Crank-Nicolson is used in time. That will sound alarming if you're aware of C-N's inherent inability to damp spurious oscillations caused by discontinuities, but a bit of Rannacher treatment will take care of that (see here). In any case, in order to take time discretization out of the picture, I used 50000 time steps for the results below, so the time error is negligible (no oscillation issues either) and the plotted error is purely due to the S-discretization. The placement of grid points relative to a discontinuity has a significant effect on the result: having a grid point fall exactly on the barrier produces different behaviour from having the barrier fall midway between two grid points. Figure 1 has the story. The exact value (4.53888216 in case someone's interested) was calculated on a very fine grid. It can be seen that the worst we can do is place a grid point on the barrier and solve with no smoothing. The error on the coarsest grid (which still has 55 points up to the strike and is close to what we would maybe use in practice) is clearly unacceptable (15.5%). The best we can do without smoothing (averaging) is to make sure the barrier falls in the middle between two grid points. This can be seen to significantly reduce the error, but only once we've sufficiently refined the grid (curve (b)). We then see what can be achieved by placing the barrier on a grid point and smoothing by averaging as described above. Curve (c) shows that linear smoothing already greatly improves things compared to the previous effort in curve (b). Finally, curve (d) shows that quadratic smoothing can add some extra accuracy still.

Figure 1. Solution grid convergence for a European, daily monitored up-and-out put, using a uniform grid in S.

S = 98, K = 110, B = 100, T = 0.25, vol = 0.16, r = 0.03, 63 equi-spaced monitoring dates (last one at T)

This case is also one which greatly benefits from the use of a non-uniform grid that concentrates more points near the discontinuity. The slides below show exactly that. Quadratic smoothing with the barrier on a grid point is used. First is the solution error plot for a uniform grid with dS = 2, which corresponds to the first point from the left of curve (d) in Figure 1. The second slide shows the error we get when a non-uniform grid of the same size (and hence same execution cost) is used. The error curve has been pretty much flattened even on this coarsest grid. The error has now gone from 15.5% on a uniform grid with no smoothing down to 0.01% on a graded grid with smoothing applied, for the same computational effort. Job done. Here's the function I used for it: SmoothOperator.cpp.
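The grading function behind the second slide isn't stated, so the following is only a sketch of one common choice: a sinh-stretched grid that clusters nodes around the barrier and keeps one node exactly on it. The function name `makeGradedGrid` and the clustering parameter `alpha` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: builds nIntervals + 1 nodes on [sMin, sMax], denser
// near `barrier`, with one node exactly on the barrier. `alpha` sets the
// width of the dense region (smaller alpha means stronger clustering).
// The index of the barrier node is returned through barrierIndex.
std::vector<double> makeGradedGrid(double sMin, double sMax, double barrier,
                                   std::size_t nIntervals, double alpha,
                                   std::size_t& barrierIndex)
{
    assert(sMin < barrier && barrier < sMax);
    assert(alpha > 0.0 && nIntervals >= 2);

    // Lengths of the two sides in the stretched coordinate.
    const double cL = std::asinh((barrier - sMin) / alpha);
    const double cR = std::asinh((sMax - barrier) / alpha);

    // Split the intervals in proportion to the stretched lengths so the
    // spacing stays roughly continuous across the barrier.
    const long guess = std::lround(nIntervals * cL / (cL + cR));
    std::size_t nL = static_cast<std::size_t>(std::max(guess, 1L));
    nL = std::min<std::size_t>(nL, nIntervals - 1);
    const std::size_t nR = nIntervals - nL;

    std::vector<double> s(nIntervals + 1);

    // Left side: xi runs from 1 at sMin down to 0 at the barrier.
    for (std::size_t j = 0; j <= nL; ++j) {
        const double xi = static_cast<double>(nL - j) / static_cast<double>(nL);
        s[j] = barrier - alpha * std::sinh(cL * xi);
    }
    // Right side: xi runs from 0 at the barrier up to 1 at sMax.
    for (std::size_t j = 1; j <= nR; ++j) {
        const double xi = static_cast<double>(j) / static_cast<double>(nR);
        s[nL + j] = barrier + alpha * std::sinh(cR * xi);
    }

    barrierIndex = nL;  // sinh(0) == 0, so s[nL] equals barrier exactly
    return s;
}
```

For instance, `makeGradedGrid(0.0, 200.0, 100.0, 100, 5.0, iB)` gives 101 nodes with the node at index `iB` equal to 100; the range 0 to 200 and `alpha = 5` are illustrative values, not taken from the experiments above. Note that on such a grid the cell around the barrier node is generally no longer symmetric, so the averaging has to use the actual distances to the neighbouring midpoints.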
