When it comes to stochastic volatility (SV) models (whether in their pure form, or typically in combination with local volatility and/or jumps), their adoption in practice has been largely driven by whether they offer an "analytical" solution for vanilla options. The popularity of the Heston model probably best exemplifies this. When such a solution doesn't exist, calibration (to the vanilla option market) is considered more difficult and time-consuming. But what are the chances that a model that happens to have a (semi-)analytical solution is also the best one for the job? It could happen of course, but this clearly isn't the right criterion for choosing a model. Another model may well fit the market's implied volatility surfaces better and describe their dynamics more realistically.

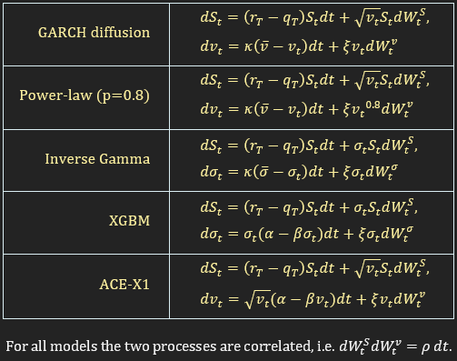

In my last couple of posts I spoke about how a fairly simple PDE / finite differences approach can actually enable fast and robust option calibrations of non-affine SV models. I also posted a little console app that demonstrates the approach for the GARCH diffusion model. I have since played around with that app a little more, so here I'm giving a second version that can calibrate the following 5 non-affine SV models (plus Heston for comparison).

For the GARCH diffusion or power-law model (and the PDE pricing engine used for all the models implemented in this demo) see [1]. For the Inverse Gamma (aka "Bloomberg") model see for example [2]. The XGBM model was suggested to me by Alan Lewis, who has been working on an exact solution for it (to be published soon); in this demo it is priced via the PDE engine. The ACE-X1 model is one of the models I've tried calibrating, and it seems to do a very good job in various market conditions. All the above models calibrate to a variance (or volatility) process that is arguably more realistic than that of the Heston model (which, when calibrated, very often has zero as its most probable long-run value).
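
To make the contrast with Heston concrete, here are the variance processes of Heston and of the GARCH diffusion model of [1], written in standard textbook notation. The symbols $\kappa$ (mean-reversion speed), $\theta$ (long-run variance) and $\xi$ (vol-of-vol) are my choice for this note, and the app's own parameter names may differ.

$$
\begin{aligned}
\text{Heston:} \quad & dv_t = \kappa(\theta - v_t)\,dt + \xi\sqrt{v_t}\,dW_t \\
\text{GARCH diffusion:} \quad & dv_t = \kappa(\theta - v_t)\,dt + \xi\,v_t\,dW_t
\end{aligned}
$$

The stationary distribution of the Heston variance is a gamma distribution with shape $2\kappa\theta/\xi^2$, so its mode sits at zero whenever $2\kappa\theta \le \xi^2$, which is what calibrated parameters very often give. The stationary distribution of the GARCH diffusion variance is an inverse gamma, whose mode is always strictly positive.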

Please bear in mind that the above demo is just that, i.e. not production-level. In [1] the PDE engine was developed just for the GARCH diffusion model and Excel was used for the optimization. I then quickly coupled the code with a Levenberg-Marquardt implementation and adjusted the PDE engine for the other models without much testing (or specific optimizations). That said, it works pretty well in general, with calibration times ranging from a few seconds up to a minute. It offers three speed / accuracy settings, but even with the fastest setting calibrations should be more accurate (and much faster) than any Monte Carlo-based implementation. A production version for a chosen model would be many times faster still. Note that you will also need to download the VC++ Redistributable for VS2013. The 64-bit version (which is a little faster) also requires Intel's MKL to be installed. The demo is free, but please acknowledge it if you use it and do share your findings.

EDIT April 2020: After downloading and running this on my new Windows 10 laptop I saw that the console was not displaying the inputs as intended (it was empty). To get around this please right click on the top of the console window, then click on Properties and there check "Use legacy console". Then close the console and re-launch.

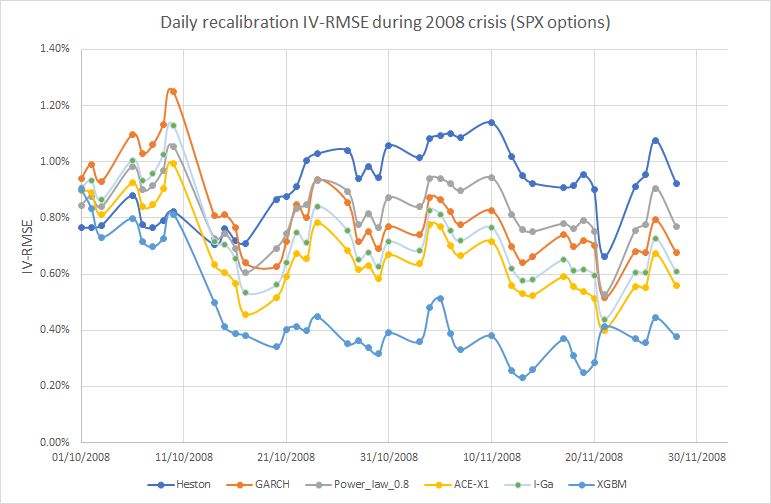

As a small teaser, here's the performance of these models (in terms of IV-RMSE, recalibrated every day) for SPX options during two months at the height of the 2008 crisis. Please note that I used option data (from www.math.ku.dk/~rolf/Svend/) which only include options within ±30% in moneyness. Expirations range from 1M to 3Y. Including further out-of-the-money options does change the relative performance of the models, as does picking a different market period. That being said, my tests so far show that the considered models produce better IV fits than Heston in most cases, while also representing arguably more realistic dynamics. They therefore seem to be better candidates than Heston for combining with local volatility and/or jumps.

| | Heston | GARCH | Power_law_0.8 | ACE-X1 | I-Ga | XGBM |
|---|---|---|---|---|---|---|
| average IV-RMS | 0.91% | 0.81% | 0.82% | 0.66% | 0.73% | 0.45% |
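
For anyone wanting to reproduce this kind of comparison with their own data, here is a minimal Python sketch of the error metric. It assumes an unweighted RMSE over all quotes of a day and a plain average over days; the app itself may weight quotes differently. The function names and the sample quotes are made up for illustration.

```python
import math


def iv_rmse(model_ivs, market_ivs):
    """Unweighted root-mean-square error between model and market implied vols."""
    if len(model_ivs) != len(market_ivs) or not market_ivs:
        raise ValueError("need two non-empty lists of equal length")
    squared_errors = [(m - k) ** 2 for m, k in zip(model_ivs, market_ivs)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def average_iv_rmse(daily_fits):
    """Plain average of the daily IV-RMSE values (one recalibration per day).

    daily_fits is a list of (model_ivs, market_ivs) pairs, one pair per day.
    """
    daily_errors = [iv_rmse(model, market) for model, market in daily_fits]
    return sum(daily_errors) / len(daily_errors)


if __name__ == "__main__":
    # Made-up implied vols (as decimals), purely illustrative.
    day_1 = ([0.41, 0.39, 0.38], [0.40, 0.40, 0.37])
    day_2 = ([0.45, 0.43], [0.44, 0.45])
    print(f"average IV-RMSE: {average_iv_rmse([day_1, day_2]):.2%}")
```

Implied vols go in as decimals (0.40 for 40%), so the result is on the same scale as the percentages in the table.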

References

[1] Y. Papadopoulos, A. Lewis, “A First Option Calibration of the GARCH Diffusion Model by a PDE Method,” arXiv:1801.06141v1 [q-fin.CP], 2018.

[2] N. Langrené, G. Lee, Z. Zhu, “Switching to non-affine stochastic volatility: A closed-form expansion for the Inverse Gamma model,” arXiv:1507.02847v2 [q-fin.CP], 2016.
